Plan & Design

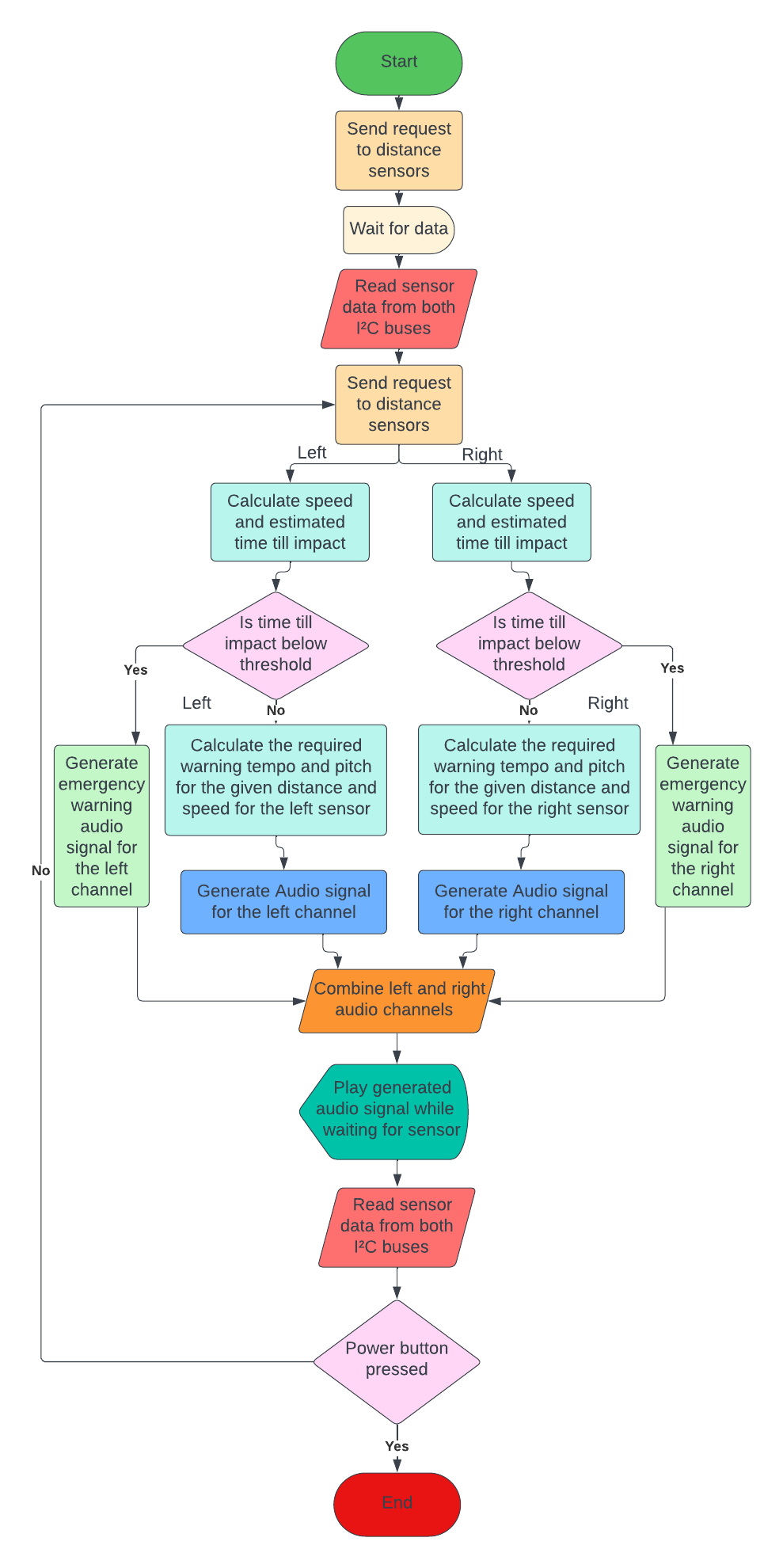

A flowchart/architecture diagram to show how the project will work.

A clear, detailed description of the project.

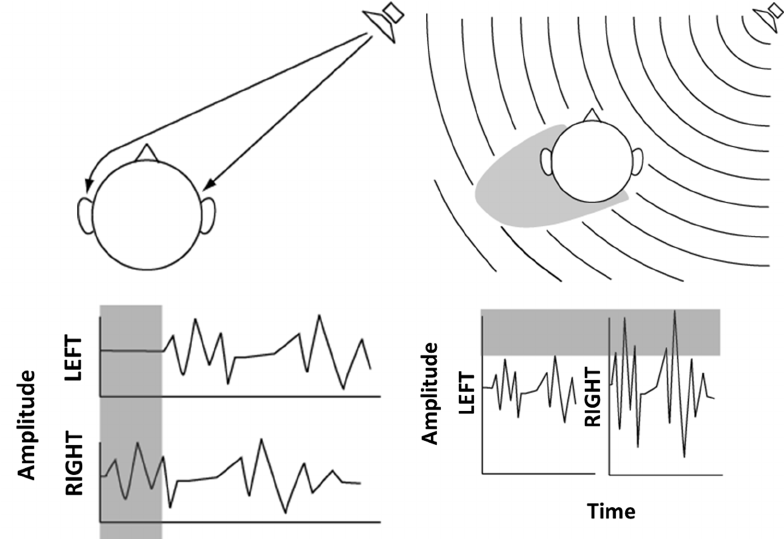

The project is designed to assist people with visual impairments or total blindness. Most solutions on the market are designed for outdoor use or rely heavily on extensive prior setup (such as placing audio tags so the user can find their belongings). This project is instead meant to aid visually impaired people in their day-to-day life at home by helping them navigate their house more quickly and safely. Using an array of precise laser distance sensors mounted around the user's wrist, the device warns the user of the distance and direction of an obstacle using stereo sound. Through a pair of Bluetooth headphones, the device plays a tone in the left and right ears that varies in pitch, volume, and tempo according to the user's velocity and the estimated time until they would hit the obstacle. Its wrist-mounted design allows for non-intrusive operation, and its finely tuned warning system can help the user avoid obstacles and move around with more confidence, improving their wellbeing and autonomy.
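The idea of scaling the warning by the time until impact can be illustrated with a minimal sketch. It covers only the tempo of the tone, and everything in it is an assumption made for illustration rather than the device's actual code: the function names, the 3-second warning horizon, and the beep interval limits are all hypothetical.

```python
def time_to_impact(prev_m, curr_m, dt_s):
    """Estimate seconds until contact from two successive distance readings."""
    closing_speed = (prev_m - curr_m) / dt_s  # m/s, positive when approaching
    if closing_speed <= 0:
        return None  # moving away or standing still: no impact expected
    return curr_m / closing_speed


def beep_interval(tti_s, min_s=0.1, max_s=1.0, horizon_s=3.0):
    """Map time-to-impact to the gap between beeps (hypothetical limits).

    A shorter time-to-impact gives a shorter gap, so the tone speeds up.
    """
    if tti_s is None or tti_s >= horizon_s:
        return max_s
    frac = max(tti_s, 0.0) / horizon_s  # 0.0 at contact, 1.0 at the horizon
    return min_s + frac * (max_s - min_s)
```

For example, readings of 2.0 m and then 1.5 m taken 0.5 s apart give a closing speed of 1 m/s and a time to impact of 1.5 s. Pitch and volume could be mapped in the same way.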

A description of the technologies you will use and their role within your project.

1. The device has gone through several iterations of the method it uses to measure distance to its environment, moving from ultrasonic sensors to infrared LiDAR sensors. An ultrasonic sensor emits sound waves that reflect off the environment back to the device, and the microcontroller measures the time between sending the pulse and receiving the reflection. Similarly, the new LiDAR sensors send a microsecond laser pulse and measure the time it takes for the wavefront to return.
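Both sensor types rely on the same time-of-flight relationship: the pulse travels to the obstacle and back, so the distance is half the round-trip time multiplied by the wave speed. The sketch below shows that arithmetic only. The function name and constants are illustrative, and real sensor modules typically do this conversion internally.

```python
SPEED_OF_SOUND_M_S = 343.0          # in air at roughly 20 °C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum, close enough for air


def tof_distance_m(round_trip_s, wave_speed_m_s):
    """Convert a round-trip echo time to a one-way distance in metres."""
    # The pulse covers the distance twice (out and back), hence the division.
    return wave_speed_m_s * round_trip_s / 2.0
```

For an obstacle 1 m away, an ultrasonic echo returns after about 5.8 ms, while a light pulse returns after about 6.7 ns.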


2. I studied how the brain perceives the location of a sound, specifically Interaural Time Delay (ITD) and its simplified equation, $\mathrm{ITD} = \frac{a}{c}(\theta + \sin\theta)$, where $a$ is the radius of the head, $c$ is the speed of sound, and $\theta$ is the angle of the source in radians. I then implemented a system that takes advantage of this effect: the device proportionally delays the audio stream to one ear relative to the other, emulating the time it takes sound to travel from one ear to the other. After some fine-tuning, this system greatly increased the user's ability to perceive the location of an object or obstacle.
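As a rough illustration of the ITD approach, the sketch below evaluates the simplified equation and converts the result into a whole number of audio samples by which to delay the far ear. The head radius, sample rate, sign convention (positive angle means the obstacle is to the right), and function names are assumptions for this example, not values taken from the device.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 °C


def itd_seconds(azimuth_rad):
    """Simplified ITD: (a / c) * (theta + sin(theta)), theta in radians."""
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (
        azimuth_rad + math.sin(azimuth_rad)
    )


def delay_far_ear(left, right, azimuth_rad, sample_rate_hz=44100):
    """Return (left, right) sample lists with the far ear delayed by the ITD.

    Silence is padded onto the other channel so both stay the same length.
    """
    n = round(abs(itd_seconds(azimuth_rad)) * sample_rate_hz)
    pad = [0.0] * n
    if azimuth_rad > 0:
        # Source on the right: the left ear hears it later.
        return pad + left, right + pad
    # Source on the left (or dead ahead, where n is 0).
    return left + pad, pad + right
```

With these assumed values, a source directly to one side (θ = π/2) gives an ITD of roughly 0.66 ms, or about 29 samples at 44.1 kHz.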


3. To leverage the aforementioned effect, the system relies on good stereo sound and very low latency. These attributes were surprisingly hard to achieve.


4. The project was also intended to have a machine-learning camera that detects objects and reads them out to the user. Sadly, the Raspberry Pi refused to install the TensorFlow Lite dataset and software I had planned to use, no matter what I tried. I attempted a fresh install of Raspbian on a new SD card, but this was even worse, as the Raspberry Pi would then no longer install any software at all.


5. I opted to use a Raspberry Pi for its computational power, display capabilities, Python support (as required in Advanced requirement 1), and eventual camera functionality, although I didn't get around to implementing the camera. This choice caused a lot of issues: the SD card can become unreliable when the device is swung around on the wrist strap, causing it to crash, and I also had many software issues with package installation and compatibility with the laser sensors.